Superconducting Quantum Computer Scalability Explained: From Qubits to Fault-Tolerant Systems

2026.02.06 · Blog

Understanding Superconducting Quantum Computer Scalability

What Scalability Means in Quantum Computing

In classical computing, scalability typically refers to adding more processors or memory to improve performance. In quantum computing, scalability has a deeper and more complex meaning. It involves the ability to increase the number of usable qubits while maintaining control accuracy, system stability, and computational reliability. For superconducting quantum computers, scalability determines whether the technology can move beyond laboratory demonstrations toward solving real-world problems.

Superconducting quantum computer scalability is not only about growing qubit counts. It also includes maintaining coherence, ensuring high-fidelity quantum gates, managing control electronics, and integrating software and hardware into a unified system. Each added qubit doubles the size of the quantum state space and also adds control lines, calibration work, and potential crosstalk paths, which is why scalability is the defining challenge for the entire field.
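As a rough illustration of where exponential growth does appear: the quantum state of n qubits is described by 2^n complex amplitudes, so the memory a classical simulator needs doubles with every added qubit. The sketch below assumes 16 bytes per amplitude (two 64-bit floats); the function name is illustrative and not part of any library.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store a full n-qubit state vector classically."""
    if n_qubits < 0:
        raise ValueError("n_qubits must be non-negative")
    # An n-qubit state has 2**n complex amplitudes.
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (10, 30, 50):
    gib = statevector_bytes(n) / 2**30
    print(f"{n} qubits: {gib:.3g} GiB")
```

This only describes the cost of simulating the state classically. The engineering cost of the hardware itself grows with wiring, calibration, and crosstalk rather than with 2^n.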

Why Scalability Is the Central Challenge for Superconducting Quantum Computers

Superconducting quantum computers are among the most promising quantum technologies due to their fast gate speeds, compatibility with semiconductor fabrication, and mature microwave control techniques. However, as systems scale, noise, crosstalk, and engineering overhead become harder to manage. Without scalable architectures, even powerful small-scale devices remain limited to experimental use.

Scalability directly impacts whether superconducting quantum computers can support advanced algorithms, quantum error correction, and long-term industrial applications. This makes it the primary benchmark for progress in superconducting quantum computing.

Scalability vs Performance: Why More Qubits Alone Is Not Enough

A system with many qubits but poor coherence or low gate fidelity may perform worse than a smaller, well-engineered device. True superconducting quantum computer scalability balances qubit quantity with quality. Performance metrics such as coherence time, gate accuracy, and system uptime must scale together. Otherwise, additional qubits introduce more errors than computational value.

The Building Blocks of Scalable Superconducting Quantum Systems

Superconducting Qubits: Foundations of Scalable Quantum Hardware

Superconducting qubits are artificial atoms fabricated using lithographic techniques similar to those in modern semiconductor manufacturing. Their compatibility with established fabrication processes makes them attractive for scalability. However, achieving uniform performance across large qubit arrays requires precise control over materials, geometry, and fabrication processes.

Scalable superconducting quantum systems rely on standardized qubit designs that can be reproduced reliably. This standardization is a prerequisite for moving from handcrafted prototypes to engineering-grade quantum hardware.

Qubit Coherence Time and Its Impact on System Growth

Coherence time measures how long a qubit can preserve its quantum state, and is usually reported as an energy relaxation time (T1) and a dephasing time (T2). As superconducting quantum computers scale, maintaining long coherence times becomes increasingly difficult due to electromagnetic interference, material defects, and environmental noise.

Longer coherence times allow deeper quantum circuits and more complex algorithms. For scalable systems, coherence must not degrade significantly as qubit count increases. This requirement drives innovation in materials science, chip packaging, and system-level shielding.
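To make the link between coherence time and circuit depth concrete, here is a minimal sketch. It assumes a simple exponential decay of coherence, exp(-t/T), with gates executed one after another. Real devices have separate relaxation and dephasing times and noise that is not purely exponential, so the values used (50 ns gates, 100 µs and 200 µs coherence) are illustrative placeholders, not specifications of any device.

```python
import math


def remaining_coherence(depth: int, gate_time_ns: float, coherence_time_us: float) -> float:
    """Fraction of coherence left after `depth` sequential gates (simple exponential model)."""
    elapsed_ns = depth * gate_time_ns
    return math.exp(-elapsed_ns / (coherence_time_us * 1_000))


def max_depth(gate_time_ns: float, coherence_time_us: float, min_coherence: float = 0.9) -> int:
    """Largest sequential gate count that keeps the modeled coherence at or above `min_coherence`."""
    if not 0 < min_coherence < 1:
        raise ValueError("min_coherence must be between 0 and 1")
    # Solve exp(-t / T) >= min_coherence for t, then convert time to a gate count.
    budget_ns = -coherence_time_us * 1_000 * math.log(min_coherence)
    return math.floor(budget_ns / gate_time_ns)


# Assumed, illustrative values: 50 ns gates, 100 µs vs 200 µs coherence time.
for t_coh in (100, 200):
    print(t_coh, "µs ->", max_depth(gate_time_ns=50, coherence_time_us=t_coh), "gates")
```

In this model the usable depth grows linearly with coherence time, which is why improvements in materials and shielding translate directly into deeper circuits.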

Gate Fidelity as a Prerequisite for Large-Scale Quantum Circuits

Gate fidelity refers to how accurately quantum operations are executed. High-fidelity gates are essential for scalable superconducting quantum computers because errors compound with every gate, so even small per-gate error rates add up quickly as circuit depth increases. Without sufficient fidelity, scaling becomes impractical regardless of qubit count.

Engineering scalable systems requires not only improving individual gate performance but also ensuring consistency across thousands of operations and multiple qubits.
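A back-of-the-envelope way to see why fidelity limits circuit size is sketched below. It assumes that every gate has the same fidelity and that errors are independent, so the chance of an error-free run is roughly the per-gate fidelity raised to the number of gates. Real errors are often correlated, so this is a first estimate only, and the function names are illustrative.

```python
import math


def circuit_success_estimate(gate_fidelity: float, n_gates: int) -> float:
    """Approximate probability that no gate in the circuit fails (independent errors assumed)."""
    return gate_fidelity ** n_gates


def max_gates_for_target(gate_fidelity: float, target_success: float) -> int:
    """Largest gate count whose estimated success probability stays at or above the target."""
    if not (0 < gate_fidelity < 1 and 0 < target_success < 1):
        raise ValueError("fidelity and target must both lie strictly between 0 and 1")
    return math.floor(math.log(target_success) / math.log(gate_fidelity))


for fidelity in (0.99, 0.999):
    print(fidelity, "->", max_gates_for_target(fidelity, target_success=0.5), "gates")
```

Under these assumptions, improving fidelity from 99% to 99.9% raises the gate budget for a 50% success rate roughly tenfold, which is why small fidelity gains matter so much at scale.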

From Few-Qubit Devices to Multi-Qubit Architectures

Early-Stage Superconducting Quantum Computers

Early superconducting quantum computers typically consisted of only a few qubits, often optimized for proof-of-concept experiments. These systems demonstrated fundamental quantum phenomena but were not designed for scalability.

The transition from early-stage devices to scalable platforms requires rethinking architecture, layout, and system integration from the ground up.

Challenges in Scaling from 10 to 100 Qubits

Scaling from tens to hundreds of qubits introduces challenges such as increased wiring density, signal interference, and calibration complexity. Each additional qubit requires control lines, readout channels, and calibration routines, all of which must operate reliably at cryogenic temperatures.

Effective superconducting quantum computer scalability depends on modular architectures that simplify expansion while preserving performance.
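The wiring pressure described above can be estimated with a simple count. The sketch below assumes one dedicated drive line and one flux line per qubit, with readout shared by several qubits per feedline through multiplexing. These defaults are illustrative assumptions, not a description of any particular chip, and actual designs vary widely.

```python
import math


def line_count(
    n_qubits: int,
    drive_lines_per_qubit: int = 1,
    flux_lines_per_qubit: int = 1,
    qubits_per_readout_line: int = 8,
) -> int:
    """Estimate the number of lines entering the cryostat for n_qubits (assumed wiring scheme)."""
    if n_qubits < 0 or qubits_per_readout_line < 1:
        raise ValueError("invalid qubit count or multiplexing factor")
    # Dedicated lines grow linearly with the number of qubits.
    dedicated = n_qubits * (drive_lines_per_qubit + flux_lines_per_qubit)
    # Multiplexed readout needs one feedline per group of qubits.
    readout = math.ceil(n_qubits / qubits_per_readout_line)
    return dedicated + readout


for n in (10, 100, 1000):
    print(n, "qubits ->", line_count(n), "lines")
```

Because the dedicated lines dominate, multiplexing readout alone does not remove the linear growth. This is one reason modular designs and shared control schemes receive so much attention.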

Engineering Bottlenecks in Large Qubit Arrays

As qubit arrays grow, physical layout constraints become more pronounced. Crosstalk between neighboring qubits, signal routing congestion, and thermal management all limit scalability. Addressing these bottlenecks requires careful chip design, advanced packaging techniques, and system-level optimization.

Control, Readout, and System-Level Scalability

Quantum Control and Measurement at Scale

Quantum control systems generate microwave pulses to manipulate qubits, while readout systems measure their states. As superconducting quantum computers scale, these subsystems must handle increasing channel counts without compromising precision.

Scalable control architectures prioritize synchronization, low noise, and automation to reduce operational complexity.

The Role of Quantum Control and Measurement Systems

Control and measurement electronics form the interface between classical and quantum domains. Their scalability directly affects overall system performance. Integrated control systems reduce latency, improve signal integrity, and simplify deployment of large-scale quantum computers.

Reducing Crosstalk and Noise in Dense Qubit Layouts

Crosstalk and noise increase as qubit density grows. Effective isolation strategies, optimized signal routing, and improved filtering are essential to ensure reliable operation in scalable superconducting quantum systems.

Cryogenic Infrastructure and Scalability Constraints

Why Ultra-Low Temperature Environments Matter

Superconducting qubits operate at temperatures close to absolute zero to maintain superconductivity and quantum coherence. Cryogenic systems are therefore fundamental to superconducting quantum computer scalability.

Cryogenic Wiring and Thermal Load Challenges

Each control and readout line introduces thermal load into the cryogenic environment. As systems scale, managing this thermal load becomes a major constraint. Innovative wiring strategies and cryogenic-compatible components help mitigate these limitations.

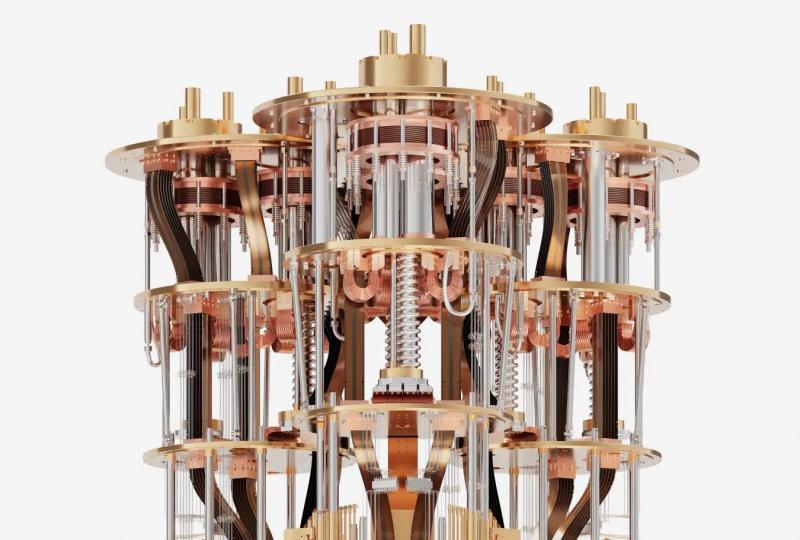

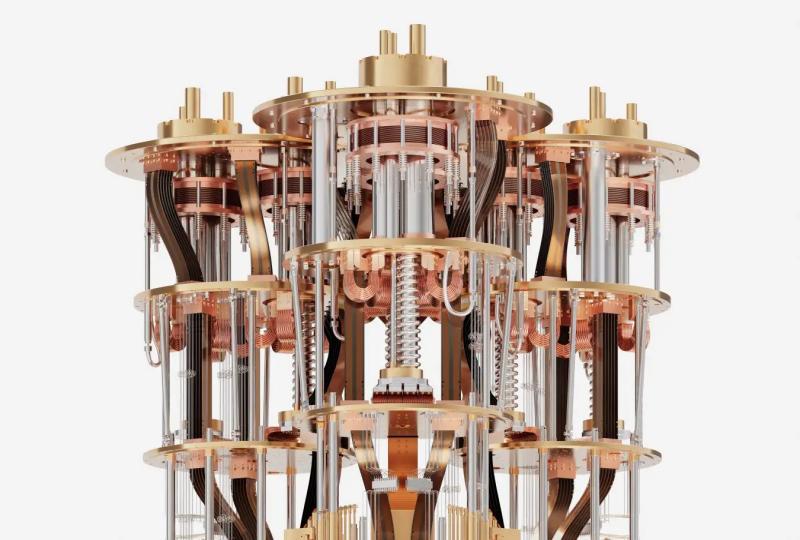
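The thermal budget discussed in this section can be framed as simple arithmetic: the heat carried by all lines reaching a refrigerator stage must stay below that stage's cooling power. The sketch below uses placeholder numbers chosen only to show the calculation. They are not measurements of any refrigerator or cable, and the function names are hypothetical.

```python
import math


def stage_heat_load_uw(n_lines: int, heat_per_line_uw: float) -> float:
    """Total heat load (in microwatts) that n_lines deliver to one temperature stage."""
    return n_lines * heat_per_line_uw


def max_lines_within_budget(
    cooling_power_uw: float,
    heat_per_line_uw: float,
    reserve_fraction: float = 0.0,
) -> int:
    """Number of lines a stage can absorb while keeping a fraction of cooling power in reserve."""
    if heat_per_line_uw <= 0:
        raise ValueError("heat_per_line_uw must be positive")
    if not 0 <= reserve_fraction < 1:
        raise ValueError("reserve_fraction must be in [0, 1)")
    usable_uw = cooling_power_uw * (1 - reserve_fraction)
    return math.floor(usable_uw / heat_per_line_uw)


# Placeholder values for illustration only.
print(max_lines_within_budget(cooling_power_uw=1000.0, heat_per_line_uw=4.0, reserve_fraction=0.5))
```

Lowering the heat carried per line or reducing the number of lines per qubit both raise the number of qubits a given refrigerator can host.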

System Integration Inside Dilution Refrigerators

Integrating large quantum systems inside dilution refrigerators requires careful mechanical, thermal, and electromagnetic design. Scalable integration ensures stable operation and simplifies maintenance.

Quantum Error Correction as the Path to Fault-Tolerant Systems

Why Error Correction Defines True Scalability

Without quantum error correction, superconducting quantum computers remain vulnerable to noise and decoherence. Error correction transforms noisy physical qubits into reliable logical qubits, enabling fault-tolerant computation.

Logical Qubits vs Physical Qubits

Error-corrected systems need many physical qubits to encode a single logical qubit, and the number grows with the level of protection required. This overhead makes scalability even more critical, as hardware resources must grow efficiently to support practical computation.

Surface Codes and Their Hardware Implications

Surface codes are among the most promising error correction schemes for superconducting quantum computers. Implementing them at scale requires precise qubit layouts, high-fidelity operations, and fast feedback loops.
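To give a sense of the overhead involved, the sketch below counts physical qubits for a rotated surface code of distance d, which uses d² data qubits and d² − 1 measurement qubits. It also applies a commonly quoted heuristic for the logical error rate, prefactor × (p / p_threshold)^((d + 1) / 2). The threshold of 1% and prefactor of 0.1 are assumed round numbers for illustration, and the heuristic only makes sense when the physical error rate is below the threshold.

```python
def physical_qubits_per_logical(distance: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    return 2 * distance * distance - 1


def logical_error_estimate(
    p_physical: float, distance: int, p_threshold: float = 1e-2, prefactor: float = 0.1
) -> float:
    """Heuristic logical error rate per round; parameter defaults are assumptions."""
    return prefactor * (p_physical / p_threshold) ** ((distance + 1) / 2)


def min_distance(
    p_physical: float,
    target_logical_error: float,
    p_threshold: float = 1e-2,
    prefactor: float = 0.1,
    max_distance: int = 101,
) -> int:
    """Smallest odd code distance whose estimated logical error meets the target."""
    if p_physical >= p_threshold:
        raise ValueError("physical error rate must be below the threshold")
    for d in range(3, max_distance + 1, 2):
        if logical_error_estimate(p_physical, d, p_threshold, prefactor) <= target_logical_error:
            return d
    raise ValueError("target not reachable within max_distance")


d = min_distance(p_physical=1e-3, target_logical_error=5e-10)
print("distance", d, "->", physical_qubits_per_logical(d), "physical qubits per logical qubit")
```

Under these assumptions a single well-protected logical qubit already consumes hundreds of physical qubits, which is why error correction and hardware scalability cannot be separated.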

Software and Architecture Considerations for Scalable Quantum Computing

Quantum Programming Frameworks and Hardware Abstraction

Scalable quantum systems require software frameworks that abstract hardware complexity. This allows researchers and developers to focus on algorithms rather than device-specific details.

Hardware-Software Co-Design for Scalable Systems

Effective superconducting quantum computer scalability relies on hardware-software co-design. Control electronics, firmware, and programming frameworks must evolve together to support system growth.

Hybrid Quantum-Classical Workflows

Near-term scalable systems often operate in hybrid quantum-classical environments. Efficient integration with classical computing resources enhances overall system usability and performance.
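A hybrid workflow typically alternates between a quantum step that evaluates a parameterized circuit and a classical step that updates the parameters. The toy loop below sketches that structure. The quantum step is replaced by a classical stand-in, `expectation_z`, which returns the known result cos(θ) for measuring Z after an RY(θ) rotation on |0⟩. On real hardware this call would submit a circuit and average measurement results. All function names here are hypothetical, and the gradient uses the parameter-shift rule for this single-rotation case.

```python
import math
from typing import Callable, Tuple


def expectation_z(theta: float) -> float:
    """Classical stand-in for the quantum step: <Z> after RY(theta) on |0> equals cos(theta)."""
    return math.cos(theta)


def parameter_shift_gradient(f: Callable[[float], float], theta: float) -> float:
    """Gradient from two shifted evaluations, as a quantum device would provide."""
    return 0.5 * (f(theta + math.pi / 2) - f(theta - math.pi / 2))


def minimize(
    f: Callable[[float], float], theta0: float, learning_rate: float = 0.4, steps: int = 100
) -> Tuple[float, float]:
    """Classical optimizer loop: plain gradient descent on the measured expectation value."""
    theta = theta0
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(f, theta)
    return theta, f(theta)


theta_opt, energy = minimize(expectation_z, theta0=0.5)
print(f"theta = {theta_opt:.4f}, <Z> = {energy:.4f}")
```

Each optimizer step costs two circuit evaluations per parameter, so the latency between the classical and quantum sides directly affects how quickly such a loop converges.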

Industrialization of Superconducting Quantum Computer Scalability

From Laboratory Prototypes to Engineering Systems

Industrial-scale quantum computing requires moving beyond experimental setups. Engineering-grade superconducting quantum computers emphasize reliability, repeatability, and standardized deployment.

Standardization and Repeatable Manufacturing

Scalability depends on the ability to manufacture quantum components consistently. Standardized chip designs, testing protocols, and quality control processes enable reliable system expansion.

Deployment Models: On-Premise vs Private Quantum Cloud

Different deployment models address varying scalability needs. On-premise systems support data-sensitive applications, while private quantum clouds enable shared access to scalable quantum resources.

How SpinQ Advances Superconducting Quantum Computer Scalability

Integrated Superconducting Quantum Hardware Solutions

SpinQ focuses on integrated superconducting quantum hardware that combines quantum chips, control systems, cryogenic infrastructure, and software into a cohesive platform. This integration simplifies scalability by reducing system fragmentation.

Engineering-Oriented Design for Scalable Quantum Systems

By emphasizing engineering reliability and system-level optimization, SpinQ supports scalable superconducting quantum computers suitable for research institutions and industrial users. Its vertically integrated approach enhances control over performance and quality.

Supporting Research, Education, and Industrial Exploration

SpinQ's scalable quantum systems enable users to explore quantum algorithms, error correction, and application development in realistic hardware environments. This practical accessibility accelerates learning and innovation across multiple sectors.

The Road Ahead: From Scalable Systems to Practical Quantum Advantage

Near-Term Scalability Milestones

In the near term, progress in superconducting quantum computer scalability will focus on improving qubit coherence, gate fidelity, and system integration. These milestones pave the way for larger, more reliable systems.

Long-Term Outlook for Fault-Tolerant Superconducting Quantum Computers

Long-term scalability aims at fault-tolerant quantum computers capable of sustained, error-corrected operation. Achieving this goal will unlock transformative applications across science, engineering, and industry.

Conclusion: Why Superconducting Quantum Computer Scalability Matters

Superconducting quantum computer scalability is the defining factor that determines whether quantum computing can transition from experimental promise to practical impact. By addressing challenges across hardware, control, cryogenics, software, and error correction, scalable superconducting systems lay the foundation for fault-tolerant quantum computation.

With engineering-focused solutions and integrated system design, scalable superconducting quantum computers are steadily moving closer to real-world deployment. As this technology matures, it will reshape how complex problems are approached across research and industry, marking a critical step toward the future of quantum computing.
