The Future of Superconducting Quantum Computer Manufacturers: Scalability, Error Correction, and Commercial Readiness

2026.02.06 · Blog

Introduction: Why Superconducting Quantum Computer Manufacturers Matter Now

Quantum computing has moved from theoretical promise to practical roadmaps, and superconducting quantum computer manufacturers are now at the center of this transformation. Over the last decade, advances in qubit design, cryogenic engineering, and quantum software have shifted the conversation from “if” to “when” quantum computers will impact real-world applications in chemistry, optimization, AI, and secure communications.

The market no longer views quantum computing as a distant research topic. Instead, enterprises, governments, and research institutions are actively evaluating vendors, pilot projects, and long-term hardware strategies. In this context, understanding how superconducting quantum computer manufacturers differ, how their technologies scale, and how close they are to commercial readiness has become a strategic priority for decision-makers.

Superconducting technology is among the front-runners in today’s quantum race because it combines well-understood fabrication processes with relatively fast gate operations and the ability to draw on existing semiconductor manufacturing ecosystems. Other modalities such as trapped-ion, photonic, and NMR-based quantum systems each have their strengths, but superconducting qubits currently dominate many of the largest and most public hardware roadmaps. As a result, specialized manufacturers and full-stack quantum providers are emerging to serve different layers of the value chain, from chips and control electronics to cloud platforms and education solutions.

What Makes a Superconducting Quantum Computer Different

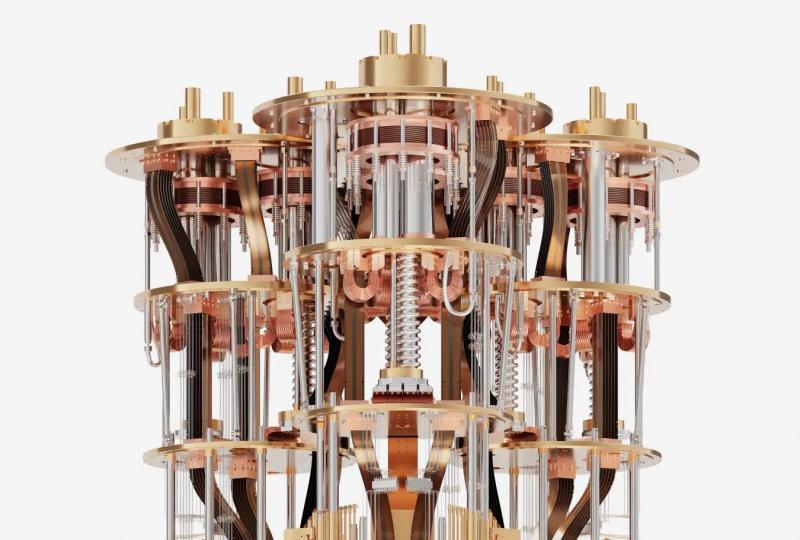

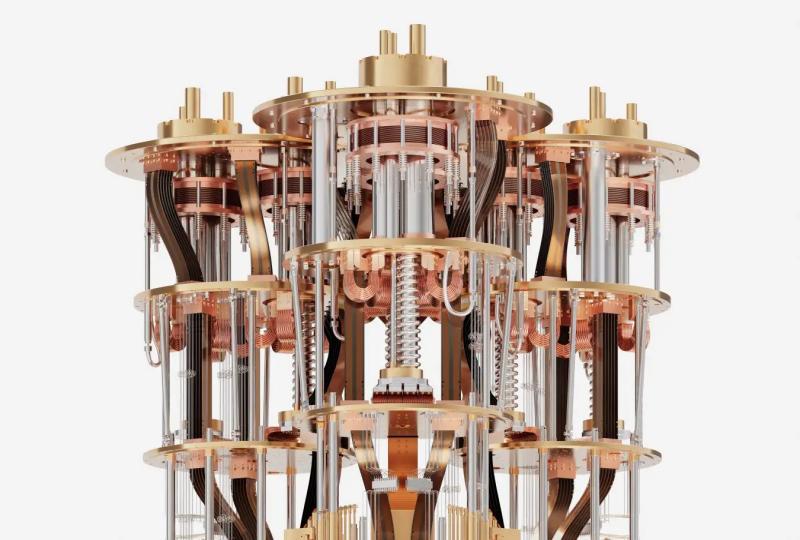

Superconducting quantum computers rely on qubits built from superconducting circuits, typically based on Josephson junctions, operated at millikelvin temperatures. Below their critical temperature, the circuit materials (commonly aluminum and niobium) become superconducting and carry current without electrical resistance. Cooling further, to a few tens of millikelvin, suppresses thermal excitations so that quantum effects at the circuit level can be harnessed to represent and manipulate quantum states. This approach leverages decades of know-how from cryogenic electronics, microwave engineering, and superconducting materials to build controllable qubits.
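
To see why cooling continues far below the superconducting transition, the short sketch below evaluates the mean thermal (Bose-Einstein) occupation of a mode at the qubit frequency for several temperatures. The 5 GHz frequency is an assumption chosen as a typical value for superconducting qubits, and the model assumes the mode is in thermal equilibrium with its surroundings.

```python
import math

# Exact SI values of the physical constants.
PLANCK_H = 6.62607015e-34   # J*s
BOLTZMANN_K = 1.380649e-23  # J/K

def thermal_occupation(freq_hz: float, temp_k: float) -> float:
    """Mean Bose-Einstein occupation of a mode at freq_hz in thermal equilibrium at temp_k."""
    x = PLANCK_H * freq_hz / (BOLTZMANN_K * temp_k)
    return 1.0 / math.expm1(x)  # 1 / (exp(x) - 1), numerically stable for small x

QUBIT_FREQ_HZ = 5e9  # assumption: a typical qubit transition frequency

for temp_k in (4.0, 1.0, 0.1, 0.02):
    n = thermal_occupation(QUBIT_FREQ_HZ, temp_k)
    print(f"{temp_k * 1000:7.0f} mK -> mean thermal occupation {n:.2e}")
```

Under this simple model, the mode holds several thermal photons on average at 1 K and more at 4 K, which would scramble a stored qubit state, whereas at 20 mK the occupation falls below 10^-5.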

A typical superconducting quantum system contains several key components. At the heart is the quantum processing unit (QPU), where qubits are implemented on a chip fabricated in a cleanroom using processes related to, but distinct from, conventional CMOS technology. The QPU is placed inside a dilution refrigerator that cools the device to a few tens of millikelvin. Surrounding the QPU is the quantum control stack: microwave signal generators, arbitrary waveform generators, fast readout electronics, and classical processing units that orchestrate gates and measure outcomes. On top of this hardware stack sits the software layer—compilers, SDKs, and cloud interfaces—that exposes the quantum computer to users as a service.

Compared with other quantum modalities, superconducting platforms are often seen as more scalable in the near term because they use lithographic fabrication and can, in principle, integrate hundreds or thousands of qubits on a single chip. Trapped-ion and photonic approaches have their own advantages (trapped ions, for example, offer long coherence times and high qubit connectivity), but they face different scaling challenges in control complexity or photonic integration. NMR-based quantum computers, an area where brands like SpinQ have been prominent, excel in robustness, accessibility, and education, but today’s NMR systems target different use cases and qubit counts than flagship superconducting devices. This diversity of hardware highlights why buyers must understand not only headline qubit numbers but also the underlying technology and its scaling trajectory.

Global Landscape of Superconducting Quantum Computer Manufacturers

The current landscape of superconducting quantum computer manufacturers is anchored by several leading technology companies that have made long-term investments and public commitments. Large players such as IBM and Google have built and operated multiple generations of superconducting quantum processors, publishing roadmaps with target qubit counts, error rates, and milestones toward fault-tolerant computing. These companies combine hardware development with extensive software ecosystems, cloud access platforms, and partnerships with academic and industrial users.

Alongside these large companies, a growing set of specialist manufacturers and regional vendors has emerged. Firms such as IQM and Rigetti focus primarily on superconducting hardware and related services, often working closely with research institutions and governments. Their strategies may emphasize on-premises systems for national labs, co-design of algorithms and hardware for specific domains, or integration with existing HPC infrastructure. This layer of specialized manufacturers offers alternatives to the largest cloud-based platforms and can provide more tailored solutions for certain customer segments.

NMR-focused brands like SpinQ occupy a complementary position in a market dominated by superconducting platforms. Rather than directly competing on maximum qubit count in superconducting architectures, SpinQ and similar companies provide accessible quantum computers and solutions that prioritize reliability, ease of use, and education. These systems have been deployed in contexts such as K–12 education, university labs, and training programs, helping to build talent pipelines and quantum literacy. As superconducting systems become more commercially ready, such educational and entry-level systems can become important feeders into the broader quantum ecosystem, preparing users to later engage with large-scale superconducting hardware via cloud or on-premises deployments.

Scalability: From Dozens to Thousands of Qubits

One of the most critical questions for any superconducting quantum computer manufacturer is how their technology will scale from today’s devices, which typically feature tens to a few hundred qubits, to future systems with thousands or millions of qubits. Raw qubit count alone is not a sufficient indicator of performance, but it is a necessary ingredient for both near-term quantum advantage and long-term fault-tolerant computing.

Current superconducting systems face several engineering bottlenecks as they scale. First, coherence times—how long qubits maintain quantum information—must remain sufficiently high even as more qubits are added and wiring density increases. Crosstalk between qubits and control lines can degrade performance as the architecture becomes more complex. Second, the cryogenic infrastructure required to cool the QPU becomes larger and more intricate with increasing channel counts, placing practical limits on how many wires and control lines can be routed into the refrigerator.
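
A back-of-the-envelope sketch shows why wiring becomes a bottleneck: in a conventional layout, the number of lines entering the refrigerator grows roughly linearly with qubit count. The per-qubit line counts and the readout multiplexing factor below are hypothetical parameters chosen for illustration, not figures from any particular manufacturer.

```python
import math

def cryostat_line_count(
    n_qubits: int,
    drive_lines_per_qubit: int = 1,        # hypothetical: one microwave drive line per qubit
    flux_lines_per_qubit: int = 1,         # hypothetical: one flux-bias line per tunable qubit
    qubits_per_readout_feedline: int = 8,  # hypothetical readout multiplexing factor
    lines_per_feedline: int = 2,           # hypothetical: one input and one output line per feedline
) -> int:
    """Estimate the number of control and readout lines routed into the refrigerator."""
    per_qubit_lines = n_qubits * (drive_lines_per_qubit + flux_lines_per_qubit)
    feedlines = math.ceil(n_qubits / qubits_per_readout_feedline)
    return per_qubit_lines + feedlines * lines_per_feedline

for n in (50, 100, 1000, 10000):
    print(n, "qubits ->", cryostat_line_count(n), "lines")
```

Under these assumptions a 1,000-qubit processor already needs more than 2,000 lines, which illustrates why scaling roadmaps look for ways to reduce the wiring required per qubit.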

Quantum chip design and packaging are therefore central to the scalability strategies of superconducting manufacturers. Approaches such as three-dimensional integration, flip-chip bonding, and modular architectures aim to decouple the scaling of qubit numbers from the physical constraints of wiring and cooling. Some roadmaps explore modular systems where multiple quantum chips are interconnected via quantum links, enabling larger logical systems assembled from smaller, more manageable units. This can reduce fabrication risk and simplify error management while still enabling large-scale processors.

Different manufacturers position their roadmaps according to their technical strengths and market goals. Large organizations with substantial R&D budgets often present multi-year plans that steadily increase qubit counts and target specific milestones in error rates and quantum volume. Smaller and more agile startups may focus on optimizing specific architectural features, such as tunable couplers, specialized qubit designs, or custom control electronics, to differentiate themselves. For buyers, examining these published roadmaps—and how consistently vendors hit their stated goals—provides valuable insight into which manufacturers are likely to deliver on long-term scalability.

Error Rates, Error Mitigation, and Error Correction

As qubit counts grow, error management becomes the defining challenge for superconducting quantum computers. Key metrics such as single-qubit and two-qubit gate fidelity, measurement accuracy, and decoherence times (usually reported as T1, the energy-relaxation time, and T2, the phase-coherence time) determine how complex an algorithm can be before noise overwhelms the computation. Crosstalk between qubits and control lines can introduce correlated errors, which are especially problematic for scaling because most error-correction schemes assume that errors are largely independent.
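
A crude way to relate gate fidelity to algorithm size is to treat every operation as failing independently, so that the probability of a circuit running without error is roughly the product of its operation fidelities. The sketch below relies on that simplifying assumption, which ignores correlated errors such as crosstalk; the gate counts and fidelity values are illustrative, not measurements of any device.

```python
import math

def circuit_success_estimate(gate_counts: dict, fidelities: dict) -> float:
    """Product of per-operation fidelities, assuming independent, uncorrelated errors."""
    return math.prod(fidelities[op] ** n for op, n in gate_counts.items())

def max_gates_for_target(fidelity: float, target: float = 0.5) -> int:
    """Largest number of gates at the given fidelity whose combined success stays >= target."""
    return math.floor(math.log(target) / math.log(fidelity))

counts = {"1q": 2000, "2q": 1000, "measure": 50}  # illustrative circuit size
for f_2q in (0.995, 0.999):
    fids = {"1q": 0.999, "2q": f_2q, "measure": 0.99}
    print(f"2q fidelity {f_2q}: success ~ {circuit_success_estimate(counts, fids):.4f}, "
          f"max 2q gates at 50% ~ {max_gates_for_target(f_2q)}")
```

Under this model, raising two-qubit fidelity from 99.5% to 99.9% increases the number of two-qubit gates that fit within a 50% success budget from about 138 to about 692.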

Today’s devices remain in the noisy intermediate-scale quantum (NISQ) regime, where full fault-tolerant quantum error correction is not yet feasible at meaningful scales. Manufacturers therefore invest heavily in error mitigation techniques that can improve the effective accuracy of computations without requiring the overhead of full error-correcting codes. These methods include circuit-level optimizations, probabilistic error cancellation, compiling strategies that minimize error-sensitive operations, and calibration routines that continually tune the hardware.
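
Probabilistic error cancellation is fairly involved to sketch, so the example below instead illustrates zero-noise extrapolation, a different and widely used mitigation technique that is not among the methods listed here. A circuit is run at deliberately amplified noise levels, and the measured expectation values are extrapolated back to the zero-noise limit. The sketch assumes the noisy results have already been collected (the noise-amplification step itself is not shown) and uses a simple linear least-squares fit; the input values are hypothetical.

```python
def zero_noise_extrapolate(noise_scales: list[float], values: list[float]) -> float:
    """Fit values ~ a + b * scale by least squares and return a, the zero-noise estimate."""
    n = len(noise_scales)
    if n < 2 or n != len(values):
        raise ValueError("need at least two (scale, value) pairs of equal length")
    mean_x = sum(noise_scales) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in noise_scales)
    if sxx == 0:
        raise ValueError("noise scales must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(noise_scales, values))
    slope = sxy / sxx
    return mean_y - slope * mean_x  # intercept of the fitted line at scale = 0

# Hypothetical expectation values measured at noise scale factors 1x, 2x, and 3x.
scales = [1.0, 2.0, 3.0]
measured = [0.80, 0.62, 0.47]
print(f"raw value at 1x noise: {measured[0]:.2f}")
print(f"zero-noise estimate:   {zero_noise_extrapolate(scales, measured):.3f}")
```

A linear fit is only one choice; both the extrapolation model and the noise-scaling method affect how much bias remains, and the extrapolated value carries larger statistical uncertainty than the raw measurement.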

In parallel, vendors are working toward full quantum error correction (QEC), which will eventually allow for logical qubits composed of many physical qubits with dramatically reduced error rates. Achieving QEC requires physical error rates below the threshold of the chosen error-correcting code, such as the surface code (whose threshold is roughly 1% per operation) or more specialized schemes. Only below threshold does adding more physical qubits per logical qubit reduce the logical error rate. This transition to logical qubits is one of the central milestones in most long-term superconducting roadmaps and will determine when fully fault-tolerant quantum computers become practical.
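
The threshold behavior can be made concrete with a commonly quoted heuristic for the surface code: the logical error rate per round scales roughly as p_L ≈ A · (p / p_th)^((d + 1) / 2) for physical error rate p and code distance d, and a rotated surface-code patch uses 2d² − 1 physical qubits. The prefactor A = 0.1, the threshold p_th = 0.01, and the target logical error rate below are rough assumptions for illustration; real overheads depend on the code, the decoder, and the noise model.

```python
def logical_error_rate(p: float, d: int, p_th: float = 1e-2, prefactor: float = 0.1) -> float:
    """Heuristic surface-code logical error rate per round (assumed prefactor and threshold)."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)

def physical_qubits_per_logical(d: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1

def smallest_distance(p: float, target: float, max_d: int = 101):
    """Smallest odd code distance whose heuristic logical error rate meets target, or None."""
    for d in range(3, max_d + 1, 2):
        if logical_error_rate(p, d) <= target:
            return d
    return None  # e.g. at or above threshold, larger codes do not help

for p in (5e-3, 1e-3):
    d = smallest_distance(p, target=5e-12)
    print(f"p={p:g}: distance {d}, {physical_qubits_per_logical(d)} physical qubits per logical qubit")
```

Under these assumptions, improving the physical error rate from 0.5% to 0.1% shrinks the overhead from roughly 9,500 to under 900 physical qubits per logical qubit, which is why fidelity improvements matter as much as raw qubit counts.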

Manufacturers also need to communicate their progress in error management clearly and credibly to enterprises, which may not be familiar with the nuances of quantum metrics. Some rely on composite indicators such as quantum volume, which combines several performance parameters into a single number. Others emphasize algorithm-specific benchmarks or demonstrator applications that show real-world relevance. Buyers should look beyond headline qubit counts and evaluate error metrics, mitigation strategies, and QEC roadmaps when comparing superconducting quantum computer manufacturers.
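
Quantum volume, as IBM defined it, is 2^n for the largest square model circuit (width n equal to depth n) whose measured heavy-output probability exceeds 2/3. The sketch below applies only that final pass/fail rule to hypothetical results. The full protocol also generates random model circuits, computes their heavy outputs classically, and requires the 2/3 threshold to be exceeded with statistical confidence, none of which is shown here.

```python
def quantum_volume(heavy_output_prob: dict[int, float], threshold: float = 2 / 3):
    """Return 2**n for the largest tested width n whose heavy-output probability exceeds threshold."""
    passing = [n for n, h in heavy_output_prob.items() if h > threshold]
    return 2 ** max(passing) if passing else None

# Hypothetical mean heavy-output probabilities per square circuit size n x n.
results = {2: 0.81, 3: 0.76, 4: 0.71, 5: 0.64}
print("quantum volume:", quantum_volume(results))  # prints: quantum volume: 16
```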

Commercial Readiness: From Lab Prototypes to Enterprise‑Grade Systems

Commercial readiness for superconducting quantum computers extends far beyond having a functioning QPU in a lab. Enterprise customers expect reliability, predictable performance, security, and service-level guarantees that match or complement their existing IT and HPC environments. For a superconducting quantum computer manufacturer, demonstrating this level of readiness involves hardware, software, operations, and ecosystem maturity.

Benchmarking frameworks play an important role in this process. Metrics such as quantum volume, circuit-layer operations per second (CLOPS, a measure of execution speed), and algorithm-specific benchmarks allow vendors to characterize their systems in ways that are meaningful for users. When these benchmarks are combined with transparent documentation and independent validation, they provide a clearer picture of how a system will perform under realistic workloads.
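
As we understand IBM’s published definition, CLOPS times a fixed workload of parameterized circuits: M circuit templates, each re-run with K parameter updates, S shots per run, and D = log2(QV) layers, so that CLOPS = M × K × S × D / elapsed time. The sketch below uses the default sizes as we recall them (M = 100, K = 10, S = 100) together with a hypothetical quantum volume and elapsed time; treat all of these as assumptions to check against the vendor’s own documentation.

```python
import math

def clops(num_templates: int, num_updates: int, num_shots: int,
          num_layers: int, elapsed_s: float) -> float:
    """Circuit-layer operations per second: total layers executed divided by wall-clock time."""
    return num_templates * num_updates * num_shots * num_layers / elapsed_s

qv = 32                       # hypothetical quantum volume
layers = int(math.log2(qv))   # D = log2(QV) = 5
elapsed_s = 50.0              # hypothetical end-to-end wall-clock time in seconds
print(f"CLOPS ~ {clops(100, 10, 100, layers, elapsed_s):,.0f}")
```

Because the elapsed time covers compilation, parameter updates, and data transfer as well as QPU execution, CLOPS reflects the speed of the whole stack rather than gate speed alone.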

Deployment models also matter. Some manufacturers provide access exclusively through cloud platforms, giving users a shared environment where they can run quantum workloads without managing the hardware themselves. Others offer on-premises systems, particularly for government laboratories, sensitive industrial applications, or regions with specific data sovereignty requirements. In many cases, a hybrid model emerges, where cloud services are used for exploration and development, while strategic partners deploy dedicated hardware for production or highly confidential research.

Brands that focus on education and entry-level systems, including those using NMR technology, contribute to commercial readiness by building a richer quantum ecosystem. Solutions that combine hardware, curriculum, and cloud access help train the next generation of quantum developers and users. As superconducting systems become more available, these educational platforms can serve as gateways, allowing users to start with accessible systems and later transition to more powerful superconducting hardware without a steep learning curve.

Choosing a Superconducting Quantum Computer Manufacturer

For organizations evaluating a superconducting quantum computer manufacturer, a structured decision framework is essential. One core criterion is the quality of the qubits themselves, including coherence times, gate fidelities, and stability over time. Vendors that openly publish and update these metrics provide greater transparency and allow customers to track progress. Another key factor is roadmap credibility—how well the manufacturer’s historical performance aligns with its published plans for new devices and improvements.

Ecosystem and developer support are equally important. Open-source toolchains, development kits, and support for widely used frameworks (such as those that interface with Qiskit, Cirq, or similar SDKs) make it easier for teams to prototype algorithms and integrate quantum capabilities into existing workflows. A strong ecosystem also includes partnerships with universities, integrators, and ISVs, as well as clear documentation, sample code, and training resources.

Total cost of ownership (TCO) should be considered across the entire lifecycle of engagement. This includes not only direct hardware costs or cloud usage fees, but also integration work, staff training, maintenance, and upgrades. For some organizations, starting with cloud-based access to shared superconducting systems may be more cost-effective and lower risk. Others—especially national labs or large enterprises with specialized needs—may justify the investment in on-premises systems and long-term vendor partnerships.
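
A simple cumulative-cost comparison can help frame the choice between cloud access and on-premises systems. Every figure in the sketch below is hypothetical and in arbitrary currency units. The annual terms are meant to bundle usage fees or maintenance together with integration, training, and upgrades, and the model ignores discounting, price changes, and hardware refresh cycles.

```python
def cumulative_cost(upfront: float, annual: float, years: int) -> float:
    """Total spend after the given number of years."""
    return upfront + annual * years

def break_even_year(cloud_annual: float, onprem_upfront: float,
                    onprem_annual: float, horizon_years: int):
    """First year in which cumulative on-premises cost is at or below cloud cost, else None."""
    for year in range(1, horizon_years + 1):
        onprem = cumulative_cost(onprem_upfront, onprem_annual, year)
        cloud = cumulative_cost(0.0, cloud_annual, year)
        if onprem <= cloud:
            return year
    return None

# Hypothetical inputs in arbitrary units.
cloud_annual = 2.0     # per year: cloud usage fees plus integration and training
onprem_upfront = 10.0  # one-off: system purchase and installation
onprem_annual = 0.8    # per year: maintenance, staff, and upgrades

for horizon in (5, 10):
    year = break_even_year(cloud_annual, onprem_upfront, onprem_annual, horizon)
    print(f"{horizon}-year horizon: break-even year = {year}")
```

With these placeholder numbers, on-premises ownership only breaks even in year 9, so an organization planning a shorter engagement would favor cloud access.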

Different customer types will have distinct decision paths. Research labs and universities may prioritize openness, academic collaborations, and access to low-level hardware controls. Enterprise innovation teams may focus on domain-specific use cases, integration with existing HPC and AI infrastructure, and vendor support for co-developing proofs of concept. Governments and national labs may emphasize security, sovereignty, and strategic alignment with long-term national research programs. In every case, understanding the strengths and positioning of each superconducting quantum computer manufacturer is critical.
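
The criteria discussed in this section can be combined into a simple weighted scorecard whose weights differ by customer type. Everything in the sketch below is hypothetical: the vendor names, the 0 to 10 scores, and the weights are placeholders that an evaluation team would replace with its own assessments.

```python
# Hypothetical weights for two customer profiles; each set sums to 1.0.
PROFILES = {
    "research_lab": {"qubit_quality": 0.40, "roadmap_credibility": 0.20, "ecosystem": 0.30, "tco": 0.10},
    "enterprise":   {"qubit_quality": 0.25, "roadmap_credibility": 0.25, "ecosystem": 0.25, "tco": 0.25},
}

# Hypothetical 0-10 scores; for "tco", a higher score means a lower expected cost.
VENDORS = {
    "Vendor A": {"qubit_quality": 9, "roadmap_credibility": 6, "ecosystem": 8, "tco": 4},
    "Vendor B": {"qubit_quality": 7, "roadmap_credibility": 8, "ecosystem": 7, "tco": 8},
}

def weighted_score(scores: dict, weights: dict) -> float:
    """Weighted average of criterion scores, normalized by the total weight."""
    total_weight = sum(weights.values())
    return sum(weights[c] * scores[c] for c in weights) / total_weight

def rank_vendors(vendors: dict, weights: dict) -> list:
    """Vendor names sorted from highest to lowest weighted score."""
    return sorted(vendors, key=lambda v: weighted_score(vendors[v], weights), reverse=True)

for profile, weights in PROFILES.items():
    print(profile, "->", rank_vendors(VENDORS, weights))
```

With these placeholder numbers, the research-lab profile ranks Vendor A first while the enterprise profile ranks Vendor B first, illustrating how the same vendor data can lead to different decisions.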

Use Cases Driving the Next Wave of Superconducting Quantum Systems

As superconducting quantum computers mature, certain application domains are emerging as early drivers of value. In materials science and chemistry, quantum devices can potentially simulate molecules and reaction pathways beyond the reach of classical methods, opening opportunities in catalysis, drug discovery, and advanced materials. Optimization problems in logistics, finance, and energy systems offer another promising area, where quantum algorithms may provide speedups or better solution quality under certain conditions.

Hybrid quantum-classical workflows are likely to be the dominant pattern in the near and medium term. In this model, quantum processors handle specialized subroutines, while classical HPC and AI infrastructure perform the bulk of the computation. Superconducting manufacturers that facilitate tight integration with existing data centers, cloud environments, and AI pipelines will have an advantage, as they can offer more practical and incremental paths to quantum-enhanced applications.
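
The hybrid pattern can be sketched as a variational loop: a classical optimizer proposes circuit parameters, the quantum processor returns a measured expectation value, and the optimizer updates the parameters. In the sketch below the QPU call is replaced by a hypothetical classical stand-in, `expectation_z`, which returns the exact expectation ⟨Z⟩ = cos θ for a single qubit rotated by RY(θ) from |0⟩. Gradients use the parameter-shift rule, which is exact for this gate. No real SDK or cloud API is used.

```python
import math

def expectation_z(theta: float) -> float:
    """Hypothetical stand-in for a QPU call: <Z> after RY(theta)|0> equals cos(theta)."""
    return math.cos(theta)

def parameter_shift_gradient(f, theta: float) -> float:
    """Parameter-shift rule: two extra circuit evaluations give the exact gradient for RY."""
    return (f(theta + math.pi / 2) - f(theta - math.pi / 2)) / 2

def hybrid_minimize(f, theta: float = 0.1, learning_rate: float = 0.4, steps: int = 100):
    """Classical gradient descent that repeatedly calls the (here simulated) quantum evaluation."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(f, theta)
    return theta, f(theta)

theta_opt, energy = hybrid_minimize(expectation_z)
print(f"theta ~ {theta_opt:.4f} (pi = {math.pi:.4f}), <Z> ~ {energy:.4f}")
```

On real hardware each call to the stand-in would be a batch of shots on the QPU, so the number of circuit evaluations per optimizer step becomes a key cost in hybrid workflows.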

Education and talent development are also essential use cases in the broader quantum ecosystem. Brands that offer compact, reliable quantum systems—whether superconducting or NMR-based—play an important role in building quantum literacy at multiple levels, from K–12 through university and professional training. By providing hands-on access to quantum hardware and user-friendly software platforms, these solutions help generate the skilled workforce that future superconducting quantum computer manufacturers will rely on.

The Next Decade: Outlook for Superconducting Quantum Computer Manufacturers

Over the next decade, superconducting quantum computer manufacturers will likely focus on three intertwined goals: scaling qubit numbers, reducing error rates, and transitioning to logical qubits with full error correction. Achieving fault-tolerant quantum computing will require substantial progress on all three fronts, as well as advances in system architecture, materials science, and quantum software.

Industry structure is also expected to evolve. Consolidation through partnerships, acquisitions, and alliances may occur as companies seek to combine hardware, software, and domain expertise. Standards for interfaces, benchmarks, and security will become more important as quantum systems move closer to production environments. Manufacturers that actively participate in defining and adopting these standards may build stronger trust with enterprise and government customers.

For buyers, choices made today can have long-term implications. Selecting a superconducting quantum computer manufacturer is not only about current device specifications but also about aligning with a partner whose roadmap, ecosystem, and business model support the organization’s strategic goals. As the market matures, brands that bridge hardware, cloud access, and education—creating end-to-end pathways from initial learning to advanced deployment—are likely to play a disproportionate role in accelerating adoption.

Conclusion: Turning Roadmaps into Real‑World Quantum Advantage

The future of superconducting quantum computer manufacturers will be defined by their ability to turn ambitious technical roadmaps into reliable, commercially relevant systems. Scaling to larger qubit numbers, mastering error correction, and demonstrating stable performance under real workloads are all necessary steps toward practical advantage.

Organizations that start engaging now—through pilots, education programs, and ecosystem partnerships—will be better positioned to benefit as the technology matures. By carefully evaluating superconducting quantum computer manufacturers on their hardware quality, error management strategies, ecosystem strength, and long-term vision, decision-makers can build a quantum strategy that evolves alongside this rapidly advancing field.
