Why Coherence Matters in Quantum Research: The 2025 Nobel Prize Context

2025.11.07 · Blog quantum coherence Nobel Prize

Quantum coherence is one of the most fundamental yet fragile phenomena in quantum mechanics, and preserving it is the defining challenge in turning quantum science from theoretical elegance into practical technology. The 2025 Nobel Prize in Physics, awarded to John Clarke, Michel H. Devoret, and John M. Martinis for the discovery of macroscopic quantum mechanical tunneling and energy quantization in an electric circuit, hinges on their success in maintaining quantum coherence in a system containing billions of particles, a breakthrough that redirected the trajectory of quantum computing research.

Understanding Quantum Coherence: The Foundation of Quantum Behavior

Quantum coherence refers to the ability of a quantum system to maintain a well-defined phase relationship among its constituent components, enabling it to exist in superposition, occupying multiple states at once. This is the essence of what distinguishes quantum mechanics from classical mechanics. In classical systems, an object occupies one definite state; in quantum systems, coherent objects exist in carefully balanced combinations of multiple states, each with its own amplitude and phase.

At the microscopic scale, electrons in atoms naturally exhibit quantum coherence, manifesting as their ability to occupy multiple orbitals simultaneously until measured. However, this coherence is extraordinarily delicate. The moment a quantum system interacts with its environment—through stray electromagnetic fields, thermal vibrations, or any form of environmental disturbance—it undergoes decoherence, transitioning from a pure quantum state to a classical statistical mixture.
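
The transition from a pure state to a statistical mixture can be made concrete with a two-level toy model. The sketch below is illustrative only: it assumes pure dephasing with a single exponential time constant, and the function names and the 100-microsecond constant are hypothetical, not taken from any experiment described here. It tracks the off-diagonal element of the density matrix of an equal superposition, which carries the phase relationship, and the purity Tr(ρ²), which falls from 1 (pure) toward 0.5 (fully mixed).

```python
import math

def dephased_density_matrix(t_us, t2_us):
    """Density matrix of (|0> + |1>)/sqrt(2) after t_us microseconds of pure dephasing.

    Assumes a simple exponential decay of the off-diagonal (coherence) terms.
    """
    coherence = 0.5 * math.exp(-t_us / t2_us)  # populations stay at 0.5 each
    return [[0.5, coherence], [coherence, 0.5]]

def purity(rho):
    """Tr(rho^2): 1.0 for a pure state, 0.5 for a fully mixed qubit."""
    return sum(rho[i][j] * rho[j][i] for i in range(2) for j in range(2))

T2_US = 100.0  # hypothetical dephasing time, chosen only for illustration

for t in (0.0, 50.0, 100.0, 500.0):
    rho = dephased_density_matrix(t, T2_US)
    print(f"t = {t:6.1f} us  off-diagonal = {rho[0][1]:.4f}  purity = {purity(rho):.4f}")
```

The populations never change in this model; only the phase information disappears, which is exactly what separates decoherence from an ordinary bit flip.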

The insight recognized by the 2025 Nobel Prize is that quantum coherence can persist in macroscopic systems, where billions of electrons behave cooperatively. The superconducting circuits built by Clarke, Devoret, and Martinis, cooled to millikelvin temperatures, maintained coherence across macroscopic distances and timescales, proving that quantum mechanics is not confined to the atomic realm but can be engineered into observable-scale systems.

The Physics of Maintaining Coherence in Macroscopic Systems

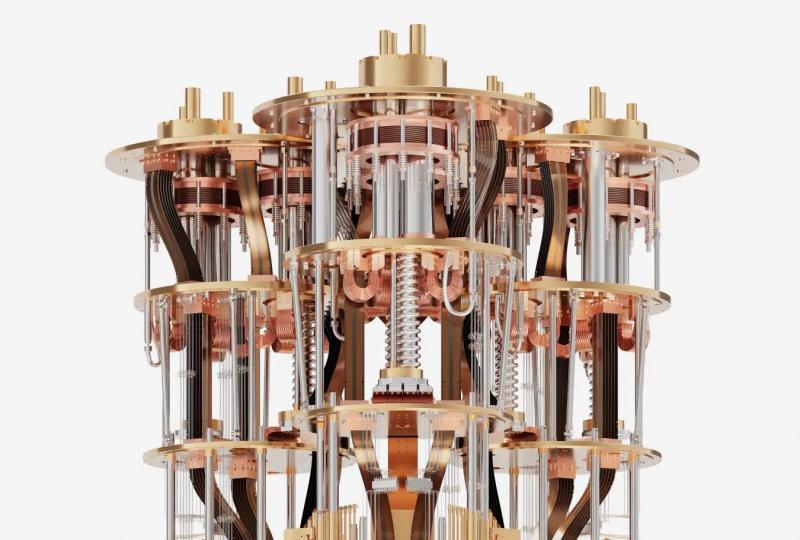

The key to achieving macroscopic quantum coherence lies in superconductivity, a phenomenon in which certain materials, when cooled below a critical temperature, lose all electrical resistance. In a superconductor, conduction electrons bind into Cooper pairs, and these pairs condense into a single macroscopic quantum state described by one unified wave function. This condensation is itself a macroscopic quantum phenomenon, creating conditions where individual electron behavior becomes irrelevant; instead, billions of electrons move in perfect synchronization.

Within this context, the Josephson junction—the centerpiece of the Berkeley experiments—functions as a quantum-mechanical device where Cooper pairs of electrons tunnel coherently across an insulating barrier separating two superconductors. What makes this remarkable is that the phase relationship characterizing this tunneling extends across the entire circuit, not just at the junction itself. The entire electrical circuit becomes described by a single macroscopic phase, meaning billions of electron pairs act as one quantum object.
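
For reference, the standard textbook Josephson relations make the role of this single phase explicit. The symbols are introduced here and do not appear in the original text: φ is the phase difference across the junction, I_c is the junction's critical current, and V is the voltage across it.

```latex
I = I_c \sin\varphi, \qquad \frac{d\varphi}{dt} = \frac{2eV}{\hbar}
```

The supercurrent depends only on φ, so one collective variable describes the motion of all the Cooper pairs crossing the barrier.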

The coherence achieved in these systems persists because of two critical factors. First, superconductivity itself eliminates energy dissipation—there is no resistance to destroy quantum phase relationships through heating. Second, operation at near-absolute-zero temperatures (below 20 millikelvin) suppresses thermal excitations that would otherwise induce decoherence. Under these conditions, the quantum phase can rotate coherently, and the system can maintain superposition between macroscopically distinct states.
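
A back-of-the-envelope estimate shows why millikelvin temperatures matter. The sketch below assumes a two-level system in thermal equilibrium and a 5 GHz transition frequency; the frequency is an assumption chosen as a typical microwave scale, not a figure from the experiments discussed here. It compares the level spacing hf with the thermal energy k_B·T and returns the Boltzmann estimate of how often the upper level is thermally occupied.

```python
import math

PLANCK_H = 6.62607015e-34   # J*s (exact SI value)
BOLTZMANN_K = 1.380649e-23  # J/K (exact SI value)

def thermal_excited_population(freq_hz, temp_k):
    """Equilibrium probability of finding a two-level system in its upper level."""
    ratio = PLANCK_H * freq_hz / (BOLTZMANN_K * temp_k)  # hf / (k_B T)
    return 1.0 / (1.0 + math.exp(ratio))

ASSUMED_FREQ_HZ = 5e9  # assumption: a typical microwave transition frequency

for temp_k in (4.0, 0.1, 0.02):
    p = thermal_excited_population(ASSUMED_FREQ_HZ, temp_k)
    print(f"T = {temp_k * 1e3:7.1f} mK  thermal excited-state population = {p:.2e}")
```

At liquid-helium temperatures the two levels are almost equally populated, while at 20 millikelvin thermal excitation becomes a rare event.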

Quantum Coherence Time: The Critical Metric

In quantum computing and quantum research broadly, coherence time—measured as either T₁ (relaxation time) or T₂ (dephasing time)—represents perhaps the single most important performance metric. Coherence time quantifies how long a quantum system can maintain its quantum properties before environmental interaction destroys them, forcing the system into a classical state.
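
The two time constants are linked. A standard relation, stated here as background rather than taken from the text above, is 1/T₂ = 1/(2T₁) + 1/T_φ, where T_φ is the pure-dephasing time. The sketch below solves it for T_φ; the function name and the input values are hypothetical.

```python
import math

def pure_dephasing_time(t1, t2):
    """Solve 1/T2 = 1/(2*T1) + 1/T_phi for T_phi (same units as the inputs)."""
    if t1 <= 0 or t2 <= 0:
        raise ValueError("T1 and T2 must be positive")
    if t2 > 2 * t1:
        raise ValueError("T2 cannot exceed 2*T1 in this model")
    if t2 == 2 * t1:
        return math.inf  # relaxation-limited: no extra dephasing
    return 1.0 / (1.0 / t2 - 1.0 / (2.0 * t1))

# Hypothetical values in microseconds, chosen only for illustration.
print(pure_dephasing_time(100.0, 120.0))
```

The relation also shows that T₂ can never exceed 2T₁: energy relaxation alone already limits how long a phase relationship can survive.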

For contemporary superconducting qubits, coherence times typically range from 50 to 300 microseconds, though leading implementations have pushed this into the millisecond regime. This seemingly modest duration creates an extraordinary constraint: quantum algorithms must complete all their operations within this window. If a computation extends beyond coherence time, decoherence transforms the quantum information into inaccessible environmental degrees of freedom, rendering the computation unreliable or meaningless.

The relationship between coherence time and quantum computing capability is steeper than a simple operation count suggests, because longer coherence enables not only more operations but also qualitatively different computations, including complete error-correction cycles. As a rough budget, a qubit with 100-microsecond coherence could execute on the order of 100 gate operations if each took about 1 microsecond; at the tens-of-nanoseconds gate times typical of superconducting hardware, the same window holds a few thousand. A qubit with 1-millisecond coherence extends either budget tenfold, enabling substantially more complex quantum algorithms.
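
The gate-count arithmetic can be written out directly. This is a rough sketch, assuming sequential gates and a single exponential decay; the helper names are hypothetical, and the 25-nanosecond gate time is an assumed value included only to show how faster gates change the budget.

```python
import math

def gate_budget(coherence_ns, gate_ns):
    """Whole number of sequential gates that fit inside one coherence time."""
    return coherence_ns // gate_ns

def remaining_coherence(n_gates, coherence_ns, gate_ns):
    """Fraction of coherence left after n_gates, assuming simple exponential decay."""
    return math.exp(-n_gates * gate_ns / coherence_ns)

scenarios = [
    ("100 us coherence, 1 us gates", 100_000, 1_000),
    ("1 ms coherence, 1 us gates", 1_000_000, 1_000),
    ("100 us coherence, 25 ns gates (assumed)", 100_000, 25),
]

for label, coherence_ns, gate_ns in scenarios:
    n = gate_budget(coherence_ns, gate_ns)
    left = remaining_coherence(n // 10, coherence_ns, gate_ns)
    print(f"{label}: {n} gates; coherence left after {n // 10} gates = {left:.3f}")
```

Times are kept as integer nanoseconds so the division is exact. In practice a useful circuit must finish well before the full budget is spent, since a meaningful fraction of coherence is already gone after a tenth of it.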

Different qubit modalities achieve dramatically different coherence times. Trapped ion qubits, which encode quantum information in internal atomic states, can maintain coherence for seconds or even minutes due to their inherent isolation from environmental noise. Photonic qubits (using properties of light particles) maintain coherence indefinitely during propagation but face other challenges in manipulation and storage. Superconducting qubits sacrifice coherence time for speed—they execute quantum gates in nanoseconds, compensating for shorter coherence through faster operations.

Decoherence: The Inevitable Challenge

Understanding quantum coherence requires understanding its nemesis: decoherence. This process occurs when a quantum system unavoidably interacts with its environment, becoming entangled with environmental degrees of freedom in such a way that quantum information leaks into inaccessible channels.

Decoherence is not the same as classical noise. Classical noise introduces random errors—bit flips among classical information. Decoherence transforms quantum superposition itself into classical mixtures; the quantum phase relationships that encode information become scrambled into the environment, and no measurement can recover them. This represents a fundamental loss of quantum information, not merely an error in classical bits.

The mechanisms inducing decoherence in superconducting qubits include thermal excitations (rare quasiparticles), electromagnetic noise from control circuitry, radiation from the environment, and materials defects in the superconducting circuits themselves. Reducing these noise sources requires extraordinary engineering: dilution refrigerators maintaining temperatures at 10-20 millikelvin, electromagnetic shielding protecting circuits from stray fields, careful materials science to eliminate defects, and sophisticated pulse control to avoid unintended excitation.

The Critical Role of Coherence in Quantum Computing

Quantum advantage—the fundamental promise that quantum computers can solve certain problems exponentially faster than classical machines—depends critically on coherence. Quantum algorithms exploit two quantum phenomena: superposition (qubits simultaneously exploring multiple computational paths) and entanglement (correlations between qubits that enable collective quantum effects). Both phenomena are exclusively quantum in nature and require maintained coherence to yield computational benefit.

Without sufficient coherence, quantum computers degrade to classical behavior. Decoherence collapses superposition, eliminating the quantum parallelism; it destroys entanglement, removing the correlations necessary for quantum speedup. The consequence is that the quantum computer produces unreliable results—no different from performing randomized classical computation.

This explains why coherence time appears in virtually all quantum computing performance specifications. Google's Willow quantum processor, announced in late 2024, achieved historic quantum error correction breakthroughs through improvements in coherence time: qubits with mean T₁ of 68 microseconds and T₂ of 89 microseconds, permitting the system to execute complex error-correction protocols that protect quantum information.

Coherence, Error Correction, and the Path to Fault-Tolerance

Quantum error correction, the mechanism necessary for scaling quantum computers beyond a handful of qubits, fundamentally depends on maintaining coherence. Error correction works by distributing quantum information across multiple physical qubits—if one qubit experiences an error, the information remains encoded redundantly across others, allowing the error to be detected and corrected without directly measuring the quantum state (which would destroy it).
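
The idea of locating an error without reading the protected value can be illustrated with the three-bit repetition code. The sketch below is a classical toy model, not a quantum simulation: it handles only bit flips, and all names and the 5% flip probability are hypothetical. The two parity checks play the role of syndrome measurements; they identify which bit flipped but are identical for the codewords 000 and 111, so they reveal nothing about the encoded value.

```python
import random

# Syndrome (two parity checks) -> index of the bit to flip back, or None.
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def syndrome(bits):
    """Parities of neighbouring bits; locates a flip without revealing the encoded value."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])

def correct(bits):
    """Apply the correction implied by the syndrome and return the repaired bits."""
    repaired = list(bits)
    target = CORRECTION[syndrome(repaired)]
    if target is not None:
        repaired[target] ^= 1
    return repaired

def simulated_logical_error_rate(p_flip, trials, seed=0):
    """Monte Carlo estimate: how often correction fails to restore the codeword 000."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        bits = [int(rng.random() < p_flip) for _ in range(3)]  # independent flips on 000
        if correct(bits) != [0, 0, 0]:
            failures += 1
    return failures / trials

def analytic_logical_error_rate(p_flip):
    """Probability of two or three flips, which the code cannot fix: 3p^2 - 2p^3."""
    return 3 * p_flip**2 - 2 * p_flip**3

P_FLIP = 0.05  # hypothetical physical error probability per bit
print("simulated:", simulated_logical_error_rate(P_FLIP, 100_000))
print("analytic: ", analytic_logical_error_rate(P_FLIP))
```

The encoded failure rate scales as p² rather than p, so redundancy helps only when the physical error rate is already small.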

However, quantum error correction introduces significant overhead. Multiple physical qubits must work cooperatively, maintaining their entangled superpositions across the entire error-correction cycle, which can involve dozens or even hundreds of operations. Each operation consumes coherence time; if decoherence occurs before error correction completes, the protection fails.

The quantum threshold theorem establishes that error correction becomes effective only when physical error rates drop below a critical threshold, often quoted between 10⁻⁴ and 10⁻² depending on the code and noise model. Below this threshold, each increase in code distance (more qubits used for error correction) yields an exponential reduction in logical error rates. Google's Willow demonstrated this behavior in 2024, showing that the logical error rate fell by more than half at each step up in code distance (from 3 to 5 to 7).
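
Below threshold, the suppression compounds geometrically. The sketch below assumes a constant suppression factor per step of two in code distance (surface-code distances are odd: 3, 5, 7, and so on). The function name, the starting error rate, and the factor of 2.0 are hypothetical values chosen for illustration, not measured results.

```python
def projected_logical_error(distance, base_rate, suppression, base_distance=3):
    """Logical error rate at an odd code distance, given a fixed suppression factor
    for every increase of the distance by two."""
    if distance < base_distance or (distance - base_distance) % 2 != 0:
        raise ValueError("distance must be base_distance plus an even number")
    steps = (distance - base_distance) // 2
    return base_rate / suppression**steps

BASE_RATE = 4e-3   # hypothetical logical error per cycle at distance 3
SUPPRESSION = 2.0  # hypothetical: error halves at every distance step

for d in (3, 5, 7, 9, 11):
    print(f"distance {d:2d}: logical error per cycle = "
          f"{projected_logical_error(d, BASE_RATE, SUPPRESSION):.2e}")
```

A factor only slightly above 1 still wins eventually, but at a steep cost in qubits, which is why lowering physical error rates remains so valuable.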

Recent breakthroughs in algorithmic fault tolerance (AFT) discovered that quantum algorithms can be restructured to perform error detection continuously during computation rather than at fixed intervals, reducing error correction overhead by up to 100 times in simulations. Yet even these remarkable advances rely fundamentally on maintaining coherence long enough for error correction to complete.

Coherence Beyond Computing: Quantum Sensing and Metrology

While quantum computing represents the most celebrated application of macroscopic quantum coherence, the phenomenon enables equally transformative technologies in quantum sensing and metrology. Quantum sensors leverage coherence and entanglement to achieve measurement precision beyond classical limits.

John Clarke himself pioneered this application, developing SQUIDs (superconducting quantum interference devices) that use Josephson junctions to create sensitive magnetometers. A SQUID operates by maintaining phase coherence across a macroscopic circuit; magnetic fields alter this phase, encoding field strength into the circuit's state. Because the coherence is macroscopic, involving billions of particles, the device achieves extraordinary sensitivity, capable of detecting magnetic fields billions of times weaker than Earth's magnetic field. Such sensitivity enables applications in geophysics, biomagnetic imaging, and fundamental physics tests.
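
The way a magnetic field is encoded in the circuit's phase can be sketched with the textbook formula for an idealized DC SQUID. The model below assumes two identical junctions and negligible loop inductance, in which case the maximum supercurrent varies as 2·I₀·|cos(πΦ/Φ₀)|, with Φ₀ = h/2e the magnetic flux quantum. The function name and the 1-microampere junction current are hypothetical.

```python
import math

PLANCK_H = 6.62607015e-34            # J*s (exact SI value)
ELEMENTARY_CHARGE = 1.602176634e-19  # C (exact SI value)
FLUX_QUANTUM = PLANCK_H / (2 * ELEMENTARY_CHARGE)  # about 2.07e-15 Wb

def squid_critical_current(flux_wb, junction_ic_a):
    """Maximum supercurrent of an idealized symmetric two-junction SQUID."""
    return 2 * junction_ic_a * abs(math.cos(math.pi * flux_wb / FLUX_QUANTUM))

JUNCTION_IC_A = 1e-6  # hypothetical critical current of each junction, in amperes

for fraction in (0.0, 0.25, 0.5, 0.75, 1.0):
    ic = squid_critical_current(fraction * FLUX_QUANTUM, JUNCTION_IC_A)
    print(f"flux = {fraction:4.2f} flux quanta  ->  critical current = {ic * 1e6:.3f} uA")
```

Because the response repeats with every flux quantum, and a flux quantum is tiny, small changes in applied field produce a measurable change in the circuit's electrical behavior.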

Quantum sensors more broadly require coherence to create entangled states that surpass classical uncertainty limits. An unentangled measurement chain follows classical scaling, where uncertainty decreases as one over the square root of the sample size. Entangled quantum sensors can in principle achieve Heisenberg scaling, where uncertainty decreases as one over the sample size itself, orders of magnitude better for large systems.
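
The gap between the two scalings is easy to tabulate. The sketch below compares the idealized relative uncertainties 1/√N and 1/N for N probes; it ignores noise and decoherence, which in practice erode the entangled advantage, and the function names are hypothetical.

```python
import math

def classical_uncertainty(n_probes):
    """Relative uncertainty with independent probes: 1/sqrt(N)."""
    return 1.0 / math.sqrt(n_probes)

def heisenberg_uncertainty(n_probes):
    """Idealized relative uncertainty with fully entangled probes: 1/N."""
    return 1.0 / n_probes

for n in (100, 10_000, 1_000_000):
    gain = classical_uncertainty(n) / heisenberg_uncertainty(n)
    print(f"N = {n:>9,}: classical = {classical_uncertainty(n):.1e}, "
          f"Heisenberg = {heisenberg_uncertainty(n):.1e}, improvement = {gain:.0f}x")
```

The improvement factor is √N, so the benefit of entanglement grows with system size, provided coherence survives across all N probes for the length of the measurement.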

The 2025 Nobel Prize: Recognition of a Paradigm Shift

The 2025 Nobel Prize thus represents recognition of a fundamental paradigm shift in quantum physics. For decades after quantum mechanics' formulation in the 1920s, coherence appeared to be an exclusively microscopic phenomenon—the domain of individual atoms and particles. Macroscopic objects, composed of trillions of particles, were thought to inevitably decohere, their quantum properties washed away by environmental interactions.

Clarke, Devoret, and Martinis experimentally destroyed this assumption. By demonstrating that entire electrical circuits containing billions of electrons could maintain quantum coherence—exhibiting tunneling, superposition, and discrete energy quantization at macroscopic scale—they established that the quantum-to-classical boundary was not fundamental but rather an artifact of engineering constraints.

This discovery opened an entirely new research domain: engineered macroscopic quantum systems. Instead of accepting decoherence as inevitable, researchers learned to combat it through materials science, cryogenics, circuit design, and quantum control. The result transformed quantum mechanics from an abstract theory describing atomic phenomena into a practical technology platform.

The magnitude of this transformation becomes apparent in contemporary quantum computing. Every superconducting qubit in use today—from Google to IBM to China's quantum computing initiatives—operates on principles directly descended from the 1984-1985 Berkeley experiments. These systems maintain macroscopic quantum coherence through careful engineering, and their performance is directly limited by coherence times.

Current Frontiers and Future Challenges

Extending coherence time remains one of the most actively pursued research objectives in quantum science. Current efforts focus on multiple approaches: improving materials purity to eliminate defects, developing new circuit architectures that reduce environmental coupling, advancing cryogenic technology to achieve yet lower temperatures, and engineering environments to induce non-Markovian dynamics where coherence decay slows through constructive environmental memory effects.

Simultaneously, researchers pursue alternative qubit modalities offering intrinsically longer coherence. Trapped ions maintain coherence for seconds or even minutes but face challenges in scalability and speed. Topological qubits promise far longer coherence through topological protection but remain in early experimental stages. Neutral atoms, positioned between superconducting and trapped-ion approaches, have recently demonstrated long coherence with potential for scalability.

The vision driving these efforts was articulated in 1984 by Clarke and his collaborators: if quantum mechanics could be maintained at macroscopic scale, entirely new technological possibilities would become accessible. Forty years of subsequent research have vindicated this vision. Quantum coherence, once thought impossible to maintain in large systems, is now engineered routinely in contemporary quantum computers. The 2025 Nobel Prize honors this achievement and marks a watershed in physics and technology: the moment when quantum mechanics transitioned from theoretical curiosity to practical engineering discipline.
